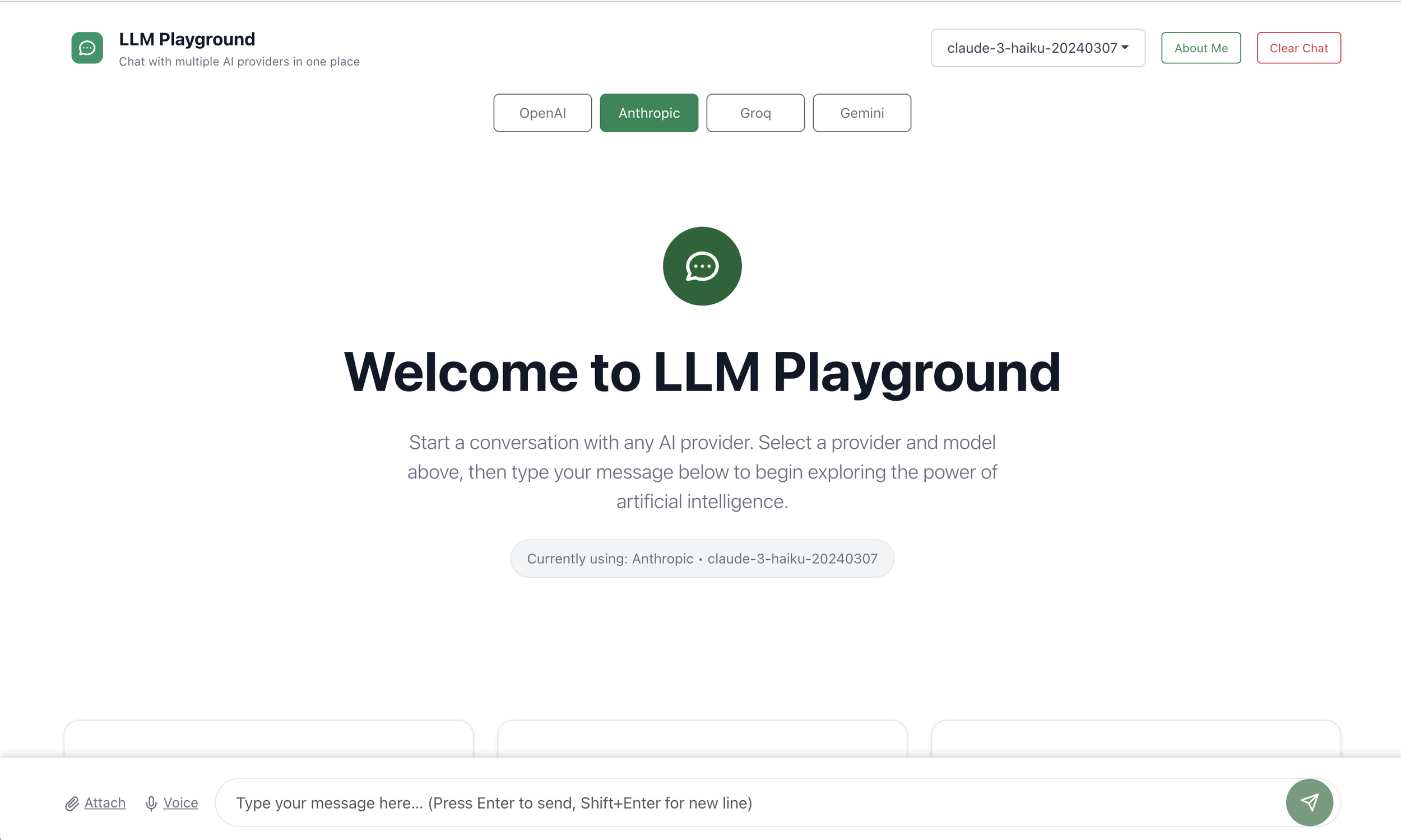

Unified interface to chat, compare, and prototype across OpenAI, Anthropic, Groq, Gemini, and other LLM providers.

AI tooling is fragmented. Teams need a single UI to test, compare and validate LLM behavior across providers without changing code each time.

A single interface reduces prototyping time, helps spot provider-specific differences, and accelerates model selection for production.

Switch providers with a click while keeping a separate conversation context for each model.
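
One way to picture per-model context is a message history keyed by provider and model. The sketch below is illustrative only: `ChatSession`, its methods, and the placeholder model names are hypothetical and are not taken from this project's code.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ChatSession:
    """Hypothetical session that keeps one message history per (provider, model)."""

    # Currently selected (provider, model) pair; the names are placeholders.
    active: tuple[str, str] = ("provider-a", "model-a")
    # Each (provider, model) key maps to its own list of chat messages.
    histories: dict[tuple[str, str], list[dict[str, str]]] = field(
        default_factory=lambda: defaultdict(list)
    )

    def switch(self, provider: str, model: str) -> None:
        # Switching only changes the active key; earlier histories stay intact.
        self.active = (provider, model)

    def add_message(self, role: str, content: str) -> None:
        self.histories[self.active].append({"role": role, "content": content})

    def context(self) -> list[dict[str, str]]:
        # Return a copy so callers cannot mutate the stored history by accident.
        return list(self.histories[self.active])


session = ChatSession()
session.add_message("user", "Hello from the first model")
session.switch("provider-b", "model-b")
session.add_message("user", "Hello from the second model")
session.switch("provider-a", "model-a")
```

After switching back, `session.context()` returns only the messages exchanged with the first model, which is the behaviour the feature describes.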

Pick a specific model for each provider and adjust settings such as temperature and max tokens.

Run the same prompt across multiple providers (roadmap feature).
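
Since this feature is still on the roadmap, the following is only a rough sketch of how such a fan-out could work, not a description of the actual design. It assumes each provider is wrapped in a callable taking `(prompt, temperature, max_tokens)`; `run_across_providers` and `fake_provider` are hypothetical names, and the stub stands in for a real SDK call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Assumed adapter shape: (prompt, temperature, max_tokens) -> response text.
ProviderFn = Callable[[str, float, int], str]


def run_across_providers(
    prompt: str,
    providers: dict[str, ProviderFn],
    temperature: float = 0.7,
    max_tokens: int = 256,
) -> dict[str, str]:
    """Send one prompt to every provider concurrently and collect the replies."""
    with ThreadPoolExecutor(max_workers=max(1, len(providers))) as pool:
        futures = {
            name: pool.submit(fn, prompt, temperature, max_tokens)
            for name, fn in providers.items()
        }
        # result() blocks until that provider's call has finished.
        return {name: future.result() for name, future in futures.items()}


def fake_provider(tag: str) -> ProviderFn:
    """Stub adapter for illustration; a real one would call the provider's SDK."""

    def call(prompt: str, temperature: float, max_tokens: int) -> str:
        return f"[{tag}] {prompt}"

    return call


results = run_across_providers(
    "Summarize this in one line.",
    {"provider-a": fake_provider("a"), "provider-b": fake_provider("b")},
)
```

Threads suit this case because provider calls are I/O-bound; the same settings are passed to every adapter so the responses stay comparable.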

Save, export and label experiments for reproducibility.
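
As an illustration of what a reproducible export might contain, the sketch below serializes labelled experiments to JSON and reads them back. The `Experiment` fields and both helper functions are assumptions made for this example; the project's actual export format is not specified here.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Experiment:
    """Hypothetical record of one labelled run."""

    label: str
    provider: str
    model: str
    prompt: str
    response: str
    # Settings are stored with the run so it can be repeated later.
    settings: dict = field(default_factory=dict)


def experiments_to_json(experiments: list[Experiment]) -> str:
    """Serialize experiments to a JSON string suitable for saving or sharing."""
    return json.dumps([asdict(e) for e in experiments], indent=2)


def experiments_from_json(text: str) -> list[Experiment]:
    """Rebuild Experiment records from a previously exported JSON string."""
    return [Experiment(**row) for row in json.loads(text)]


original = [
    Experiment(
        label="baseline",
        provider="provider-a",
        model="model-a",
        prompt="Summarize this in one line.",
        response="A short summary.",
        settings={"temperature": 0.2, "max_tokens": 128},
    )
]
exported = experiments_to_json(original)
restored = experiments_from_json(exported)
```

Keeping the prompt, model, and settings together in each record is what makes a saved run repeatable.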